Discrete neural algorithmic reasoning

Neural algorithmic reasoning The link has been copied to clipboard

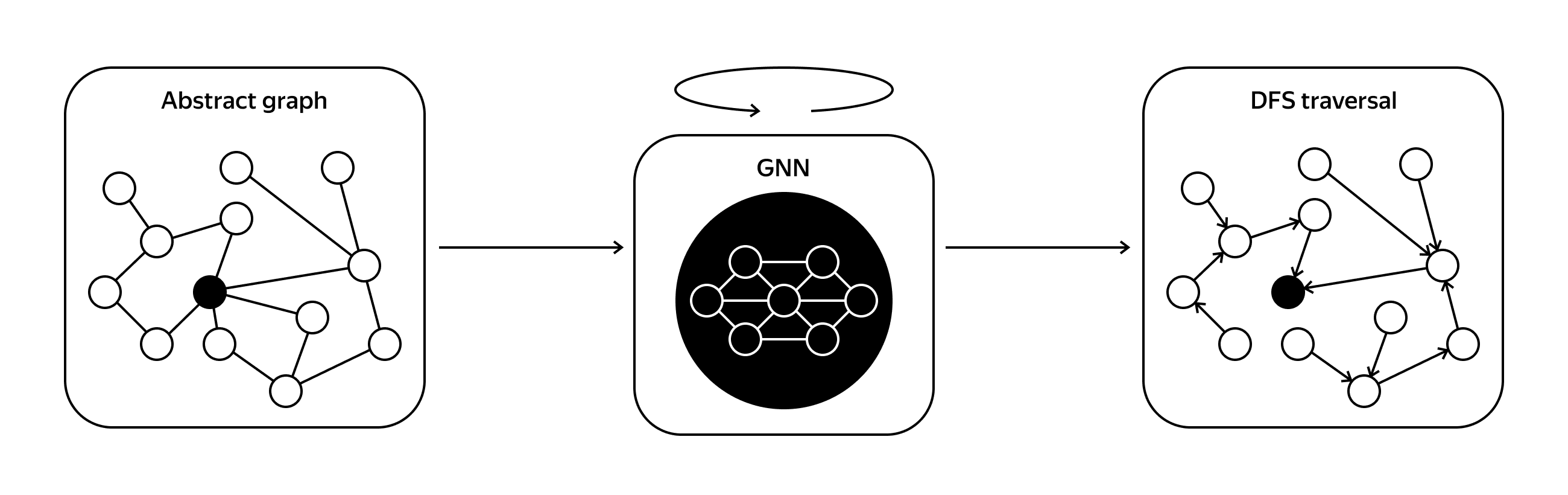

For the BFS problem, we are given a graph and a starting node. For each node, we predict its parent in the BFS tree.

Outline of our work The link has been copied to clipboard

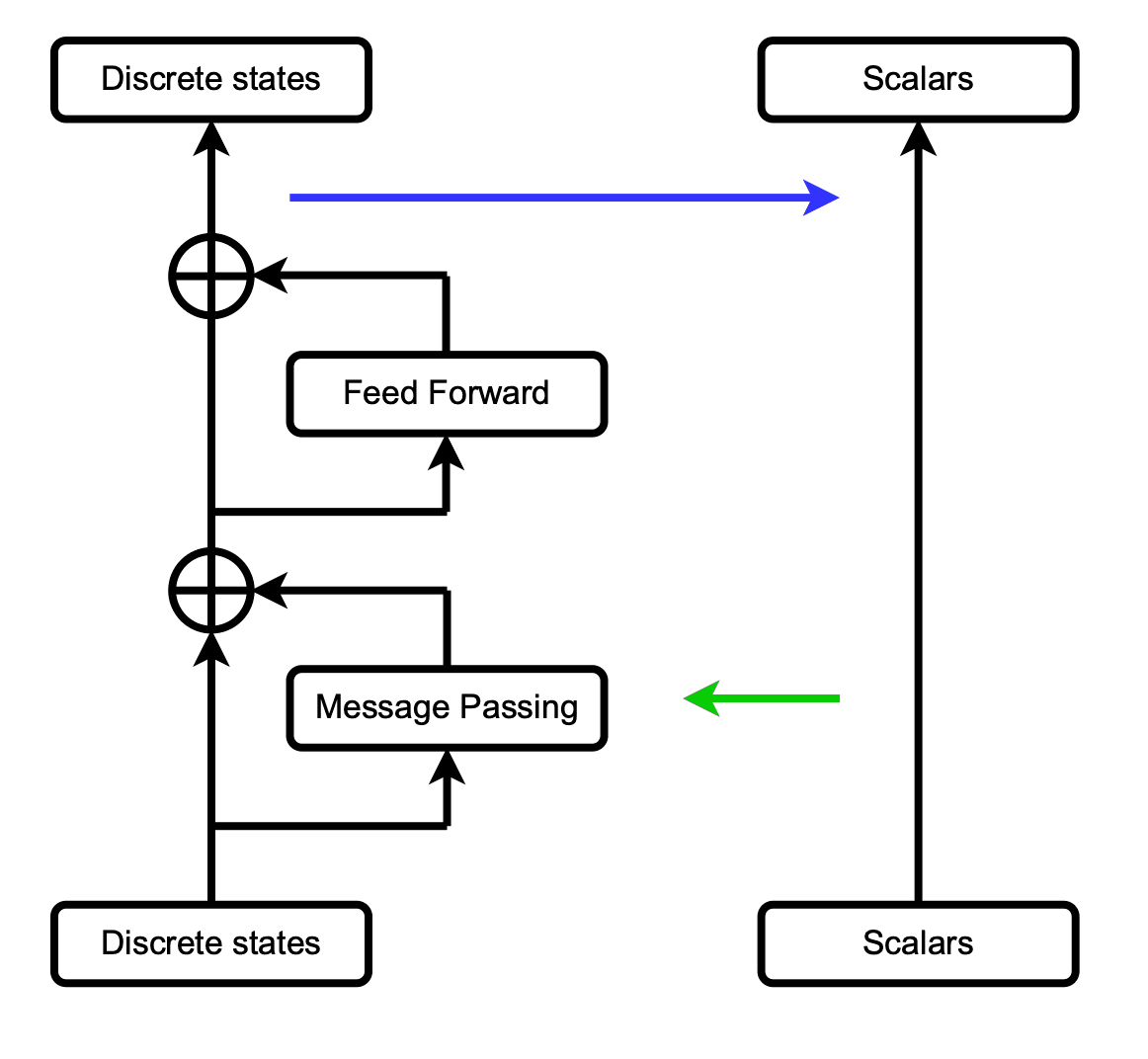

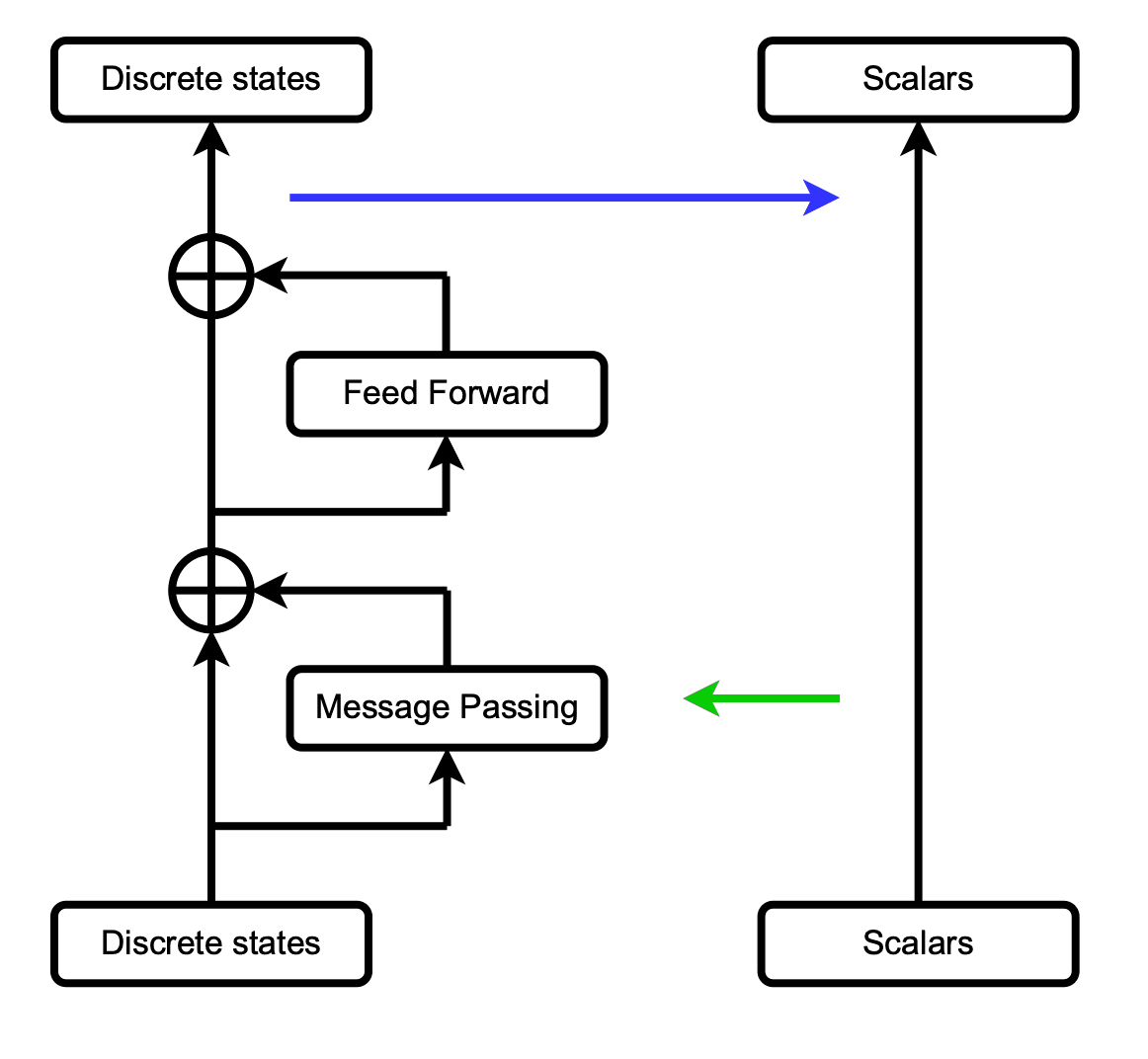

- State discretization does not allow the model to use complex and redundant dependencies in data;

- Hard attention is needed to ensure that attention weights will not be annealed for larger graphs. Also, hard attention limits the set of possible messages that each node can receive;

- Separating discrete and continuous flows is needed to ensure that state discretization does not lose information about continuous data.

Our approach The link has been copied to clipboard

For the BFS problem, we use two node states (Discovered, NotDiscovered) and two edge states (Pointer, NotAPointer). At the initial step, only the starting node has the state Discovered.

For the BFS problem, we use positional information to break ties in traversal order. Each node chooses as a parent the neighbor from the previous distance layer with the smallest index. As graphs can be arbitrarily large, operating with positional information would require infinite precision, so we propose not to discretize it.

For the BFS problem, the proposed selector allows us to exactly perform computations like “for unvisited node select the best visited neighbor”.

Results The link has been copied to clipboard

For the BFS problem, we can inspect the model and verify that the attention of Visited→Unvisited dominates the attention of Unvisited→Unvisited, so, at each step, each unvisited node will receive the message from a visited neighbor if such exists, or from an unvisited node otherwise. Then, by passing the corresponding states to the MLP after the message passing, we can check if the receiver node becomes visited or not regarding the received message, and so on.

Future work The link has been copied to clipboard

References

- Rodionov G. and Prokhorenkova L. “Discrete neural algorithmic reasoning.” 2024.←

- Shi Y. et al. “Masked label prediction: Unified message passing model for semi-supervised classification.” 2020.←

- Weiss G., Goldberg Y., Yahav E. “Thinking like transformers.” ICML 2021.←

- Minder J, Grötschla F, Mathys J, Wattenhofer R. “SALSA-CLRS: A sparse and scalable benchmark for algorithmic reasoning.” LOG 2023.←

- Ibarz B. et al. “A generalist neural algorithmic learner.” LOG 2022.←

- Bohde M., Liu M., Saxton A., Ji S. “On the Markov property of neural algorithmic reasoning: Analyses and methods.” ICLR 2024.←