Papers accepted to ICLR 2026

Six papers by the Yandex Research team and our collaborators have been accepted for publication at the International Conference on Learning Representations (ICLR 2026).

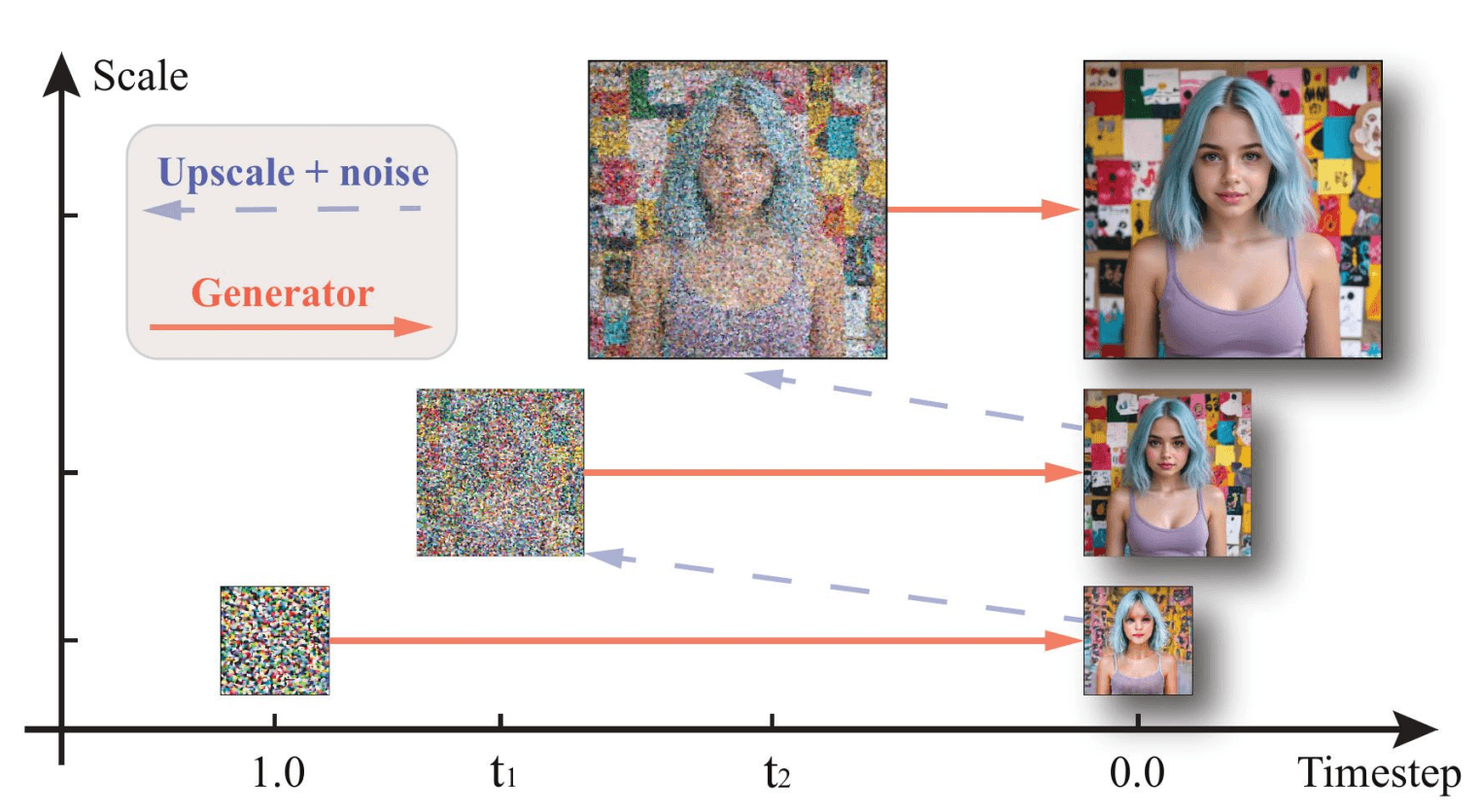

Scale-wise Distillation of Diffusion Models by Nikita Starodubcev, Denis Kuznedelev, Artem Babenko, Dmitry Baranchuk

This work introduces a new scale-wise distillation framework for diffusion models that adapts the idea of next-scale prediction to diffusion-based few-step generators. Specifically, we train the model to generate images progressively, moving from low to high resolution over a few steps. Under the same inference budget, this approach delivers higher quality than fixed-resolution generation.
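To make the low-to-high-resolution idea concrete, here is a minimal sketch of a scale-wise sampling loop. It is not the paper's implementation: the `denoise` callable stands in for a trained few-step generator, latents are toy single-channel grids, and the resolutions, noise levels, nearest-neighbour upsampling, patch size, and linear re-noising rule are all illustrative assumptions. The `num_tokens` helper gives a rough sense of why early low-resolution steps are cheap.

```python
import random
from typing import Callable, List

Grid = List[List[float]]  # toy single-channel latent, indexed [row][col]


def upsample_nearest(x: Grid, size: int) -> Grid:
    """Nearest-neighbour upsampling of a square grid to size x size."""
    n = len(x)
    return [[x[r * n // size][c * n // size] for c in range(size)] for r in range(size)]


def gaussian_noise(size: int, rng: random.Random) -> Grid:
    return [[rng.gauss(0.0, 1.0) for _ in range(size)] for _ in range(size)]


def scale_wise_sample(
    denoise: Callable[[Grid, float], Grid],  # hypothetical few-step generator
    sizes: List[int],                        # latent side length at each step (assumed)
    sigmas: List[float],                     # noise level at each step, in (0, 1] (assumed)
    seed: int = 0,
) -> Grid:
    rng = random.Random(seed)
    # First step: start from pure noise at the lowest scale.
    x = denoise(gaussian_noise(sizes[0], rng), sigmas[0])
    for size, sigma in zip(sizes[1:], sigmas[1:]):
        # Move the current prediction to the next, larger scale.
        x = upsample_nearest(x, size)
        # Re-noise it (assumed linear interpolation between signal and noise).
        eps = gaussian_noise(size, rng)
        x = [[(1 - sigma) * a + sigma * e for a, e in zip(row_x, row_e)]
             for row_x, row_e in zip(x, eps)]
        # One denoising step at this scale.
        x = denoise(x, sigma)
    return x


def num_tokens(size: int, patch: int = 2) -> int:
    """Transformer tokens for a size x size latent split into patch x patch patches."""
    return (size // patch) ** 2


if __name__ == "__main__":
    identity = lambda x, sigma: x  # stand-in for a trained generator
    out = scale_wise_sample(identity, [32, 48, 64, 128], [1.0, 0.8, 0.6, 0.4])
    print(len(out), len(out[0]))                          # 128 128
    print(sum(num_tokens(s) for s in [32, 48, 64, 128]))  # 5952
    print(4 * num_tokens(128))                            # 16384
```

With these assumed sizes, four scale-wise steps process 5,952 tokens in total, versus 16,384 for four steps at the final resolution. Since attention cost grows faster than linearly in the token count, the savings per step are, if anything, larger than this ratio suggests.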

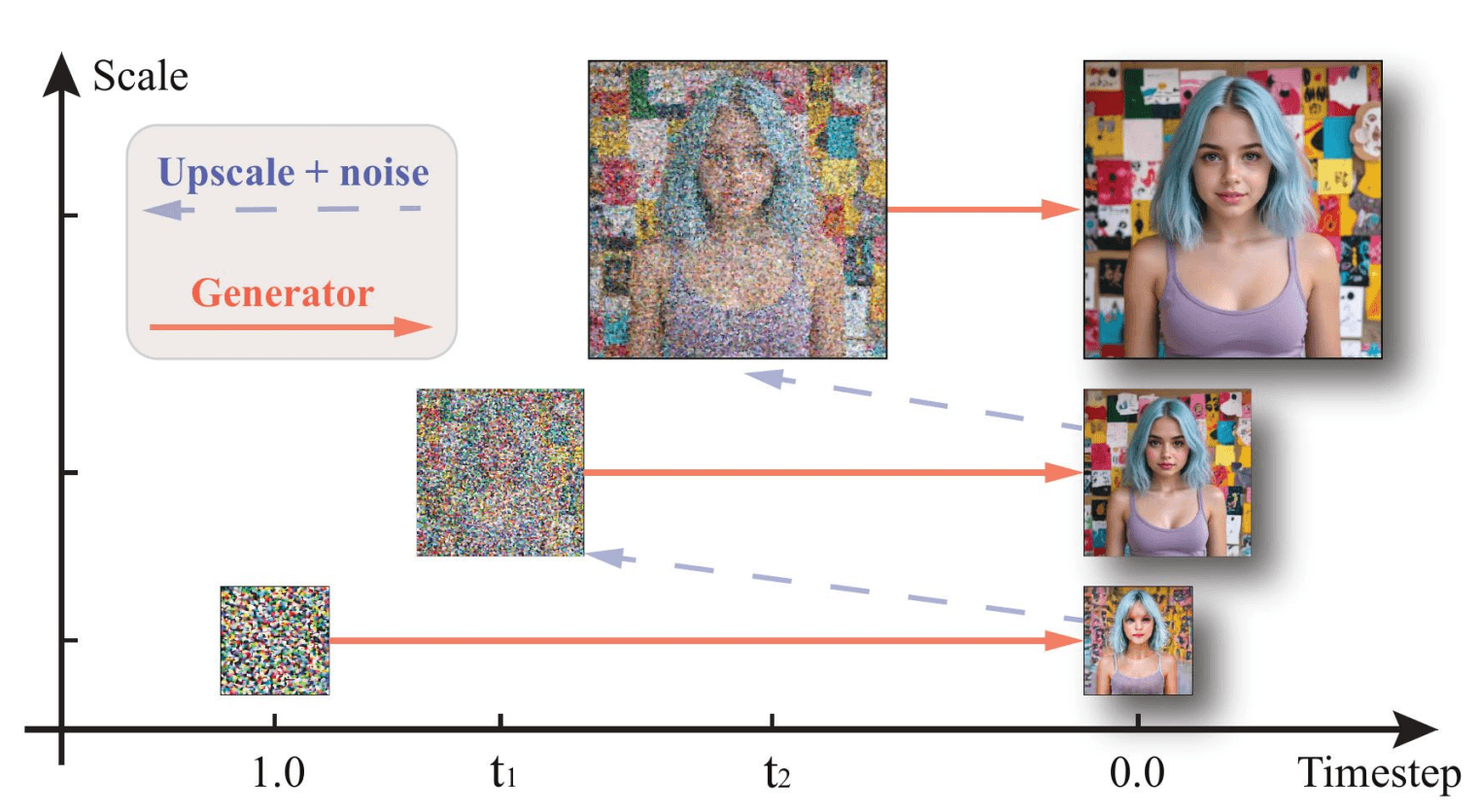

Revisiting Global Text Conditioning in Diffusion Transformers by Nikita Starodubcev, Daniil Pakhomov, Zongze Wu, Ilya Drobyshevskiy, Yuchen Liu, Zhonghao Wang, Yuqian Zhou, Zhe Lin, Dmitry Baranchuk

Diffusion Transformers usually condition on text via (i) attention and (ii) modulation using a pooled text embedding. While recent methods drop modulation and rely solely on attention, this paper examines whether modulation remains necessary and whether it can improve performance.

The authors find that, in its standard form, the pooled embedding adds little: attention alone is typically enough to propagate prompt information. However, when repurposed as a guidance mechanism to steer generation toward desired properties in a controllable way, the pooled embedding yields significant gains. The resulting method is training-free, easy to implement, adds negligible overhead, and improves performance across multiple diffusion tasks, including text-to-image generation, text-to-video generation, and image editing.
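For readers unfamiliar with the second conditioning path, the sketch below shows standard AdaLN-style modulation: the pooled text embedding is projected to a per-channel shift and scale that are applied identically to every image token after a LayerNorm. This is a simplified illustration under stated assumptions. Real Diffusion Transformers typically add the pooled embedding to the timestep embedding and pass the result through an MLP that may also produce gating terms; the projection matrices `w_shift` and `w_scale` here are hypothetical, and the paper's guidance variant is not reproduced.

```python
import math
from typing import List, Tuple

Vector = List[float]
Matrix = List[List[float]]  # row-major, shape [out_dim][in_dim]


def layer_norm(x: Vector, eps: float = 1e-6) -> Vector:
    """LayerNorm without learned affine parameters (modulation supplies them)."""
    mean = sum(x) / len(x)
    var = sum((v - mean) ** 2 for v in x) / len(x)
    return [(v - mean) / math.sqrt(var + eps) for v in x]


def matvec(w: Matrix, v: Vector) -> Vector:
    return [sum(wi * vi for wi, vi in zip(row, v)) for row in w]


def modulation_params(pooled: Vector, w_shift: Matrix, w_scale: Matrix) -> Tuple[Vector, Vector]:
    """Map the pooled text embedding to per-channel shift and scale vectors."""
    return matvec(w_shift, pooled), matvec(w_scale, pooled)


def modulate(tokens: List[Vector], shift: Vector, scale: Vector) -> List[Vector]:
    """AdaLN-style modulation: one shift/scale pair is broadcast to every image token."""
    return [
        [(1.0 + s) * h + b for h, s, b in zip(layer_norm(t), scale, shift)]
        for t in tokens
    ]
```

Because the same shift and scale reach every token, this path can only inject a global, prompt-level signal, which is consistent with the finding above that attention already carries most of the prompt information.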

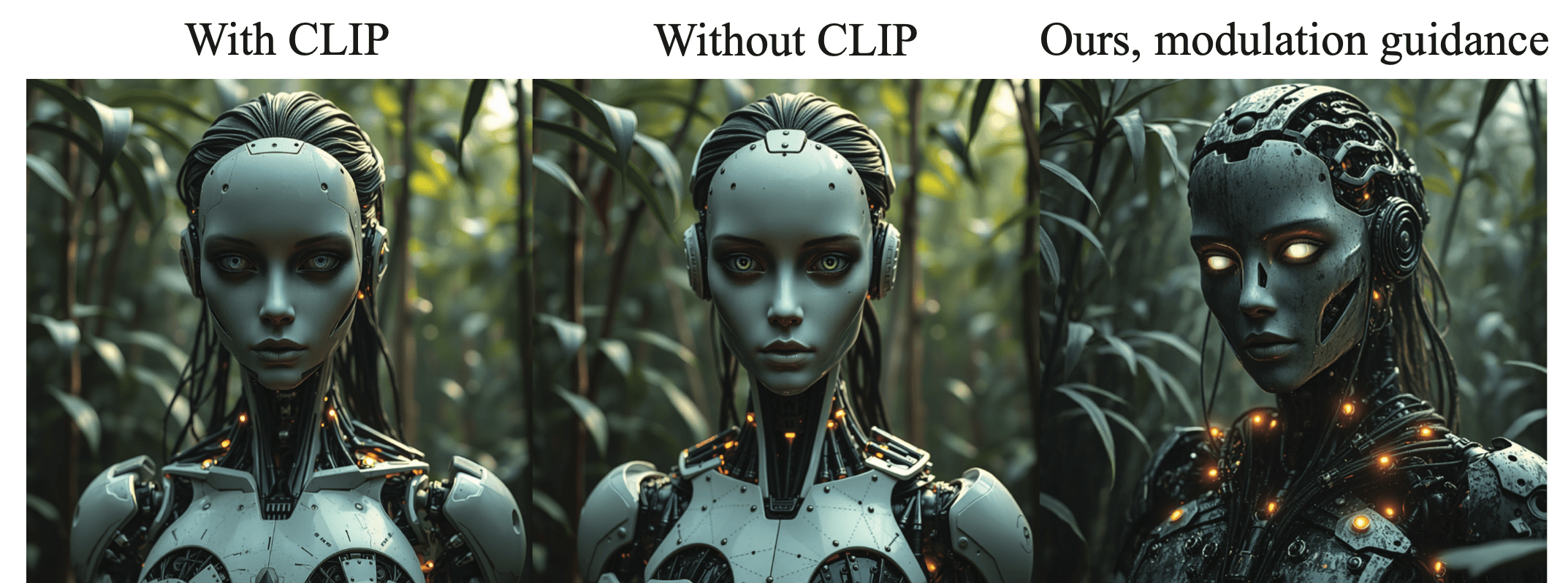

Bridging the Gap Between Promise and Performance for Microscaling FP4 Quantization by Vage Egiazarian, Roberto L. Castro, Denis Kuznedelev, Andrei Panferov, Eldar Kurtic, Shubhra Pandit, Alexandre Marques, Mark Kurtz, Saleh Ashkboos, Torsten Hoefler, Dan Alistarh

In this paper, we present a systematic study of the recently introduced Microscaling FP4 formats (MXFP4 and NVIDIA's NVFP4) for post-training quantization, revealing gaps between their promise and real-world performance. Our analysis shows that state-of-the-art methods struggle with FP4 because conventional outlier mitigation techniques prove ineffective and MXFP4's power-of-two scales introduce large quantization error. We introduce a modified GPTQ algorithm tailored to the unique properties of FP4, delivering higher accuracy than prior work. Additionally, we provide high-performance inference kernels to support these formats.
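The difference between the two formats lies mainly in how each block's shared scale is stored. The sketch below applies plain round-to-nearest quantization with each kind of scale: MXFP4 uses 32-element blocks with a power-of-two (E8M0) scale, and NVFP4 uses 16-element blocks with an FP8 E4M3 scale. It is a simplified illustration only. The per-tensor FP32 scale used by NVFP4 is omitted, the E4M3 rounding is a straightforward approximation, and none of this is the paper's GPTQ variant or its kernels.

```python
import math
from typing import Callable, List

# Non-negative values representable by FP4 (E2M1); the sign is stored separately.
FP4_GRID = [0.0, 0.5, 1.0, 1.5, 2.0, 3.0, 4.0, 6.0]


def round_fp4(v: float) -> float:
    """Round to the nearest FP4 (E2M1) value; magnitudes above 6 are clipped to 6."""
    mag = min(FP4_GRID, key=lambda g: abs(abs(v) - g))
    return math.copysign(mag, v)


def round_e4m3(v: float) -> float:
    """Round a positive scale to the nearest FP8 E4M3 value (3 mantissa bits, max 448)."""
    if v <= 0.0:
        return 0.0
    e = max(math.floor(math.log2(v)), -6)  # -6 is the smallest normal exponent
    step = 2.0 ** (e - 3)                  # spacing of representable values in this binade
    return min(round(v / step) * step, 448.0)


def mx_block(block: List[float]) -> List[float]:
    """MXFP4-style: shared power-of-two (E8M0) scale chosen from the block maximum."""
    amax = max(abs(x) for x in block)
    if amax == 0.0:
        return [0.0] * len(block)
    scale = 2.0 ** (math.floor(math.log2(amax)) - 2)  # 2 = largest FP4 exponent
    return [round_fp4(x / scale) * scale for x in block]


def nv_block(block: List[float]) -> List[float]:
    """NVFP4-style: shared E4M3 scale close to amax / 6 (per-tensor FP32 scale omitted)."""
    scale = round_e4m3(max(abs(x) for x in block) / 6.0)
    if scale == 0.0:
        return [0.0] * len(block)
    return [round_fp4(x / scale) * scale for x in block]


def quantize(x: List[float], block_size: int,
             quant_block: Callable[[List[float]], List[float]]) -> List[float]:
    """Round-to-nearest quantization of a flat tensor, block by block (MXFP4: 32, NVFP4: 16)."""
    out: List[float] = []
    for i in range(0, len(x), block_size):
        out.extend(quant_block(x[i:i + block_size]))
    return out
```

As a small example, for a block whose largest magnitude is 7.0 the MXFP4-style scale is 1, so 7.0 is clipped to 6.0. The NVFP4-style scale rounds to 1.125, and 7.0 becomes 6.75. The coarse power-of-two scale is one source of the extra MXFP4 error described above.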

Nesterov Finds GRAAL: Optimal and Adaptive Gradient Method for Convex Optimization by Ekaterina Borodich and Dmitry Kovalev

In this paper, we develop the first accelerated, parameter-free gradient method that automatically adapts its stepsize to the local curvature of the objective function at a linear (geometric) rate. We demonstrate the efficiency of the proposed algorithm by proving that it achieves the optimal convergence rate for convex optimization problems under generalized smoothness.
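The core idea of a parameter-free stepsize that tracks local curvature can be illustrated with a simpler, non-accelerated method in the same spirit: the adaptive gradient descent of Malitsky and Mishchenko ("Adaptive Gradient Descent without Descent", 2020). It estimates the inverse local Lipschitz constant from consecutive gradients and lets the stepsize grow at most geometrically. This sketch is a swapped-in illustration, not the GRAAL method from the paper. The inf-stepsize safeguard is an addition of this sketch, and the helper names are hypothetical.

```python
import math
from typing import Callable, List

Vec = List[float]


def norm(v: Vec) -> float:
    return math.sqrt(sum(a * a for a in v))


def sub(a: Vec, b: Vec) -> Vec:
    return [x - y for x, y in zip(a, b)]


def adaptive_gd(grad: Callable[[Vec], Vec], x0: Vec,
                lam0: float = 1e-6, iters: int = 100) -> Vec:
    """Adaptive GD (Malitsky & Mishchenko, 2020); not accelerated, not GRAAL."""
    x_prev, g_prev = list(x0), grad(x0)
    x = [a - lam0 * b for a, b in zip(x_prev, g_prev)]  # tiny first step, no tuning needed
    lam_prev, theta = lam0, math.inf
    for _ in range(iters):
        g = grad(x)
        if norm(g) == 0.0:
            break  # exact stationary point
        dg = norm(sub(g, g_prev))
        # Local curvature estimate: ||x_k - x_{k-1}|| / (2 ||grad_k - grad_{k-1}||).
        local = norm(sub(x, x_prev)) / (2.0 * dg) if dg > 0.0 else math.inf
        # The stepsize may grow by at most sqrt(1 + theta) per iteration (geometric growth).
        lam = min(math.sqrt(1.0 + theta) * lam_prev, local)
        if math.isinf(lam):
            lam = lam_prev  # safeguard added for this sketch
        x_prev, g_prev = x, g
        x = [a - lam * b for a, b in zip(x, g)]
        theta, lam_prev = lam / lam_prev, lam
    return x
```

Starting from a deliberately tiny `lam0`, the stepsize jumps to the local estimate after one iteration. No smoothness constant has to be supplied.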

In this paper, we present a unified theoretical analysis of SGD with adaptive preconditioning under matrix smoothness and noise assumptions, covering popular optimization algorithms such as AdaGrad-Norm, AdaGrad, and Shampoo. We also analyze an accelerated variant of this method, providing a formal basis for the empirical effectiveness of Adam.
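As a reminder of what "adaptive preconditioning" covers, the sketch below writes two of the named methods as instances of one update, x ← x − lr · P⁻¹ g. AdaGrad-Norm uses a single scalar built from accumulated squared gradient norms, while AdaGrad uses a per-coordinate diagonal. Shampoo extends the same pattern to matrix parameters with Kronecker-factored preconditioners (L^{1/4} and R^{1/4} built from accumulated G Gᵀ and Gᵀ G) and is omitted here to keep the sketch dependency-free. The deterministic gradient in the usage note stands in for a stochastic one, and nothing here reproduces the paper's analysis.

```python
import math
from typing import Callable, List

Vec = List[float]


def adaptive_sgd(grad: Callable[[Vec], Vec], x0: Vec, lr: float, iters: int,
                 mode: str = "adagrad", eps: float = 1e-12) -> Vec:
    """SGD with an adaptive preconditioner built from past (stochastic) gradients.

    mode="adagrad_norm": one scalar preconditioner, sqrt of summed squared gradient norms.
    mode="adagrad":      diagonal preconditioner, per-coordinate sqrt of summed squares.
    """
    x = list(x0)
    acc_norm = 0.0             # AdaGrad-Norm accumulator
    acc_diag = [0.0] * len(x)  # AdaGrad accumulators, one per coordinate
    for _ in range(iters):
        g = grad(x)            # could be a stochastic gradient estimate
        if mode == "adagrad_norm":
            acc_norm += sum(gi * gi for gi in g)
            denom = [math.sqrt(acc_norm) + eps] * len(x)
        elif mode == "adagrad":
            acc_diag = [a + gi * gi for a, gi in zip(acc_diag, g)]
            denom = [math.sqrt(a) + eps for a in acc_diag]
        else:
            raise ValueError(f"unknown mode: {mode}")
        # Preconditioned step: x <- x - lr * P^{-1} g with P = diag(denom).
        x = [xi - lr * gi / d for xi, gi, d in zip(x, g, denom)]
    return x
```

On an ill-conditioned toy quadratic such as f(x) = 0.5 (x₁² + 100 x₂²), the single AdaGrad-Norm scalar is dominated by the steep coordinate, so the flat coordinate moves slowly. The diagonal AdaGrad preconditioner adapts each coordinate separately and converges much faster on this example.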

Sign-SGD is the Golden Gate between Multi-Node to Single-Node Learning: Significant Boost via Parameter-Free Optimization by Daniil Medyakov, Sergey Stanko, Gleb Molodtsov, Philip Zmushko, Grigoriy Evseev, Egor Petrov, Aleksandr Beznosikov

Pretraining large models is inherently resource-intensive, particularly when it comes to hyperparameter tuning. Most practical approaches rely on heuristic learning rate schedules discovered via grid search, because choosing an optimal stepsize requires knowing the smoothness constant, a value that remains unknown for real-world tasks. In this work, we introduce a parameter-free optimization method based on Sign-SGD: the ALIAS algorithm automatically adapts stepsizes during training and achieves performance competitive with meticulously tuned baselines, effectively eliminating the need for extensive hyperparameter search.
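To see why the stepsize matters so much for Sign-SGD, consider the plain update x ← x − γₜ · sign(g). Because every coordinate moves by exactly γₜ, a constant stepsize leaves the iterate oscillating around the optimum at a distance set by γ. A decaying schedule shrinks that distance, but its overall scale still has to be tuned. The sketch below shows only this baseline with a user-supplied schedule. The ALIAS adaptive rule from the paper is not reproduced, and the schedules in the usage note are illustrative assumptions.

```python
from typing import Callable, List

Vec = List[float]


def sign(v: float) -> int:
    return (v > 0) - (v < 0)


def sign_sgd(grad: Callable[[Vec], Vec], x0: Vec,
             stepsize: Callable[[int], float], iters: int) -> Vec:
    """Plain Sign-SGD: x <- x - gamma_t * sign(g), applied coordinate-wise."""
    x = list(x0)
    for t in range(iters):
        g = grad(x)  # could be a stochastic gradient estimate
        gamma = stepsize(t)
        x = [xi - gamma * sign(gi) for xi, gi in zip(x, g)]
    return x
```

For example, on f(x) = 0.5 (x − 3)² starting from 0, a constant γ = 0.1 ends within about 0.1 of the optimum, while γₜ = 1/√(t + 1) gets closer over 1,000 steps. In both cases the right scale depends on the problem, which is the tuning burden a parameter-free method aims to remove.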