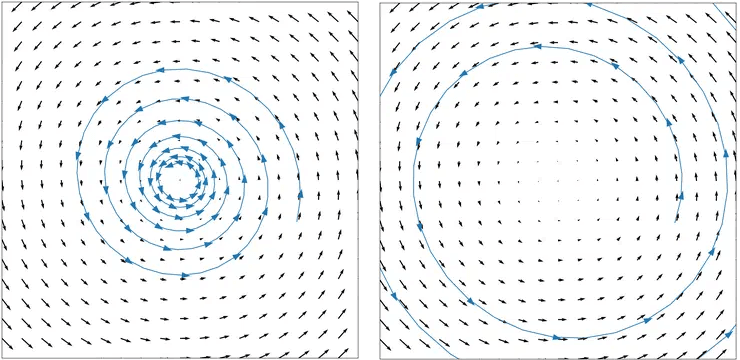

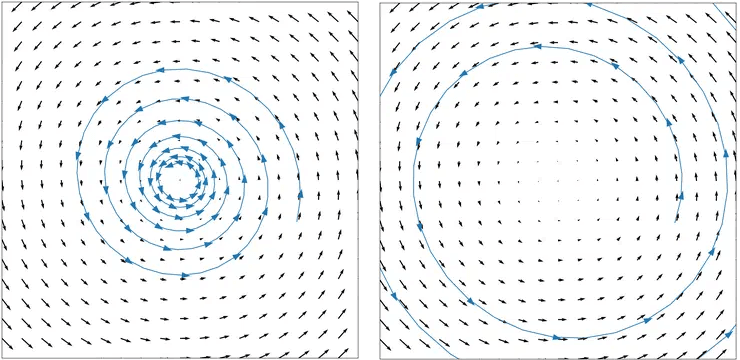

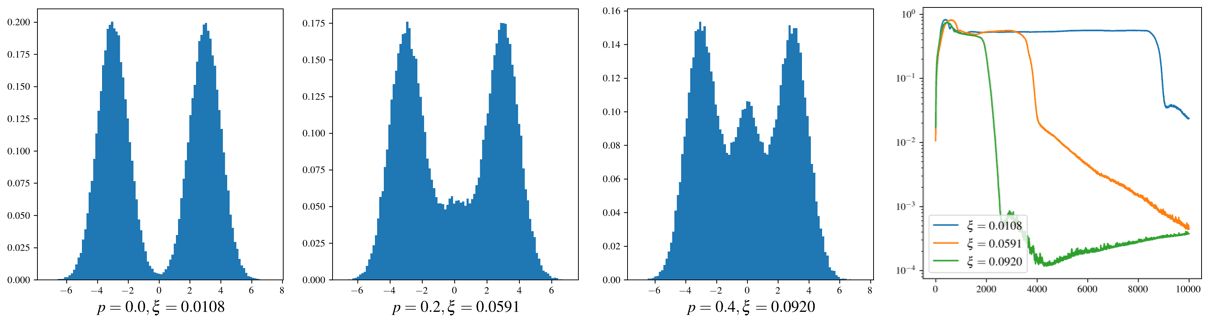

Figure taken from *Training Generative Adversarial Networks by Solving Ordinary Differential Equations*.

@inproceedings{khrulkov2021functional,
  title={Functional Space Analysis of Local GAN Convergence},
  author={Khrulkov, Valentin and Babenko, Artem and Oseledets, Ivan},
  booktitle={International Conference on Machine Learning},
  year={2021},
  organization={PMLR}
}